Neuromorphic engineering is focused on developing computer hardware and software systems that mimic the structure, function, and behavior of the human brain. The goal of neuromorphic engineering is to create computing systems that are much more energy-efficient, scalable, and adaptive than conventional computer systems, and that can solve complex problems in a manner that is similar to how the brain solves problems.

Key challenges in neuromorphic engineering include developing algorithms and hardware that can perform complex computations using very little energy, creating systems that can learn and adapt over time, and developing methods for controlling the behavior of artificial neurons and synapses in real-time.

Neuromorphic engineering and neuromorphic computing are related but distinct concepts. Neuromorphic computing is a specific application of neuromorphic engineering. It involves the use of hardware and software systems that are designed to process information in a manner that is similar to how the human brain processes information.

The Human Brain vs. Computers

One of the major obstacles in creating brain-inspired computing systems is the vast complexity of the human brain. Unlike traditional computers, the brain operates as a nonlinear dynamic system that can handle massive amounts of data through various input channels, filter information, store key information in short- and long-term memory, learn by analyzing incoming and stored data, make decisions in a constantly changing environment, and do all of this while consuming very little power.

The brain’s massive interconnectivity, redundancy, local activity, intricate logic, and functional nonlinearity make it a formidable computing machine that is difficult to replicate in a computer chip. Its ability to process and store vast amounts of information remains one of the great mysteries of science. How activity within neuronal circuits gives rise to higher cognitive processes such as language, emotions, and consciousness is not yet understood, and fully understanding how the brain works will require major technological breakthroughs.

The human brain is made up of more than a hundred billion cells, including some 86 billion nerve cells, or neurons, of thousands of different types. Each neuron may be connected to up to 10 000 other neurons, passing signals to each other via as many as one quadrillion (10¹⁵) synapses. This intricate network gives rise to the brain’s multiple, interacting levels of complexity.

Neurons are unique in the sense that they have a specific combination of genetic information, shape, and connectivity that distinguish them from other neurons. The pattern of genes a neuron expresses contributes to its overall function and characteristics, such as the types of neurotransmitters it releases or the types of signals it can receive. The shape of a neuron, including the number and distribution of its dendrites and axons, also influences its function. The connections a neuron makes with other cells, such as other neurons or muscle cells, form the basis for communication and information transfer in the nervous system. All these factors contribute to the overall complexity of the brain.

The human brain is often compared to a computer due to its ability to process and store vast amounts of information. However, there are several key differences between the human brain and conventional computers that set them apart. These differences can be grouped into three broad categories: power consumption, fault tolerance, and software-free operation.

First, the human brain consumes much less power than conventional computers. Despite its incredibly complex structure and vast computing capabilities, the human brain requires only about 10–25 watts of power – about as much as a household light bulb. By contrast, conventional computing systems running comparable workloads, such as large-scale brain simulations, can consume hundreds or even thousands of times more power.

Second, the human brain is highly fault-tolerant, meaning that it can continue to operate even if some of its components fail. This is due to the brain’s highly distributed and redundant architecture, which ensures that multiple areas can compensate for the loss of function in one area. This level of fault tolerance is not found in conventional computers, which typically rely on backup systems and fail-safes to prevent data loss in the event of a failure.

Finally, the human brain seems to operate without the need for software, as it can learn and process information through its own internal processes. Conventional computers, on the other hand, require software to perform any computational task. This software-free operation of the human brain is a fundamental aspect of its functionality, and it is one of the key areas of focus for neuromorphic engineers who are developing brain-inspired computing systems.

Artificial Neural Networks

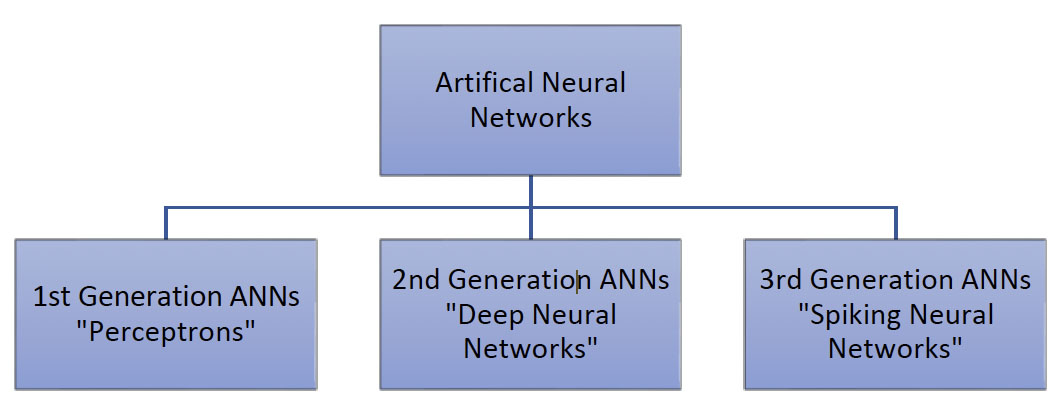

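An Artificial Neural Network (ANN) is a collection of interconnected nodes (artificial neurons) inspired by the biological human brain. The objective of an ANN is to perform cognitive functions such as problem-solving and learning from data. ANNs are commonly separated into three generations based on their computational units: first-generation networks use McCulloch–Pitts threshold neurons (perceptrons) with binary outputs, and second-generation networks use neurons with continuous activation functions, such as the sigmoid, which underpin today’s deep learning.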
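The difference between a first-generation (binary threshold) unit and a second-generation (continuous activation) unit lies only in what is applied to the same weighted sum of inputs. The minimal sketch below contrasts the two; the weights, inputs, and bias are arbitrary illustrative values, not taken from the text.

```python
import math


def weighted_sum(inputs, weights, bias):
    """Weighted sum of inputs plus bias, shared by both neuron models."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def threshold_unit(inputs, weights, bias):
    """First generation (McCulloch-Pitts / perceptron): binary step output."""
    return 1 if weighted_sum(inputs, weights, bias) >= 0 else 0


def sigmoid_unit(inputs, weights, bias):
    """Second generation: continuous output in the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-weighted_sum(inputs, weights, bias)))


# Hypothetical example values
inputs = [0.5, -1.0, 2.0]
weights = [0.8, 0.2, 0.4]
bias = -0.5

print(threshold_unit(inputs, weights, bias))          # 1
print(round(sigmoid_unit(inputs, weights, bias), 3))  # 0.622
```

Both units produce a value every time they are evaluated; neither encodes information in the timing of its output, which is what the third generation adds.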

The third generation, Spiking Neural Networks (SNNs), are biologically inspired structures in which information is represented as binary events (spikes). In SNNs, artificial neurons communicate by sending spikes, brief pulses of activity, instead of continuous signals. This mimics the way biological neurons communicate through spikes of electrical activity. SNNs do not rely on a clock-driven firing mechanism: a neuron emits an output spike only when its accumulated input surpasses an internal threshold. Moreover, neurons can operate in parallel. In theory, thanks to these two features, SNNs consume less energy and work faster than second-generation ANNs.
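Threshold-based spiking of this kind is most often modelled with a leaky integrate-and-fire (LIF) neuron: the membrane potential integrates incoming current, leaks back toward rest, and produces a spike when it crosses a threshold. Below is a minimal discrete-time sketch; the leak factor of 0.9, the threshold of 1.0, and the reset-to-zero rule are illustrative assumptions rather than the parameters of any particular chip.

```python
def lif_neuron(input_current, threshold=1.0, leak=0.9):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    At each step the membrane potential decays by `leak`, adds the input,
    and emits a spike (1) when it reaches `threshold`, then resets to 0."""
    v = 0.0
    spikes = []
    for i in input_current:
        v = leak * v + i      # leaky integration of the input
        if v >= threshold:    # threshold crossed: fire and reset
            spikes.append(1)
            v = 0.0
        else:
            spikes.append(0)
    return spikes


print(lif_neuron([0.3] * 10))  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
print(lif_neuron([0.0] * 5))   # [0, 0, 0, 0, 0]
```

A constant input of 0.3 makes the neuron fire every fourth step, while zero input produces no spikes at all; in event-driven hardware, silence means no downstream work.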

In addition, SNNs process information in an event-driven manner, which makes them well suited to time-varying inputs such as sensory data from cameras or microphones. SNNs are also considered to handle incomplete or noisy information more effectively than traditional artificial neural networks, making them attractive for applications in noisy and uncertain environments.
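Event-driven processing means that work is done only when a spike arrives. The sketch below compares a clock-driven update, which multiplies every input by every weight at every step, with an event-driven update that reads only the weight rows of inputs that actually spiked. The layer size, weights, and spike pattern are hypothetical; both functions produce the same membrane potentials.

```python
def dense_update(inputs, weights):
    """Clock-driven style: every input is multiplied by every weight, every step."""
    n_out = len(weights[0])
    potentials = [0.0] * n_out
    ops = 0
    for i, x in enumerate(inputs):
        for j in range(n_out):
            potentials[j] += x * weights[i][j]
            ops += 1
    return potentials, ops


def event_update(spike_indices, weights):
    """Event-driven style: only the weight rows of inputs that spiked are read.

    A binary spike contributes its weight directly, so no multiplication is needed."""
    n_out = len(weights[0])
    potentials = [0.0] * n_out
    ops = 0
    for i in spike_indices:
        for j in range(n_out):
            potentials[j] += weights[i][j]
            ops += 1
    return potentials, ops


# Hypothetical layer: 100 inputs, 4 output neurons, 3 inputs spike this step
weights = [[0.01 * (i + j) for j in range(4)] for i in range(100)]
inputs = [0] * 100
for idx in (3, 42, 97):
    inputs[idx] = 1
spikes = [i for i, x in enumerate(inputs) if x]

dense, dense_ops = dense_update(inputs, weights)
sparse, sparse_ops = event_update(spikes, weights)
print(dense_ops, sparse_ops)  # 400 12
```

With 3 of 100 inputs active, the event-driven update performs 12 additions instead of 400 multiply-accumulates. The saving scales with input sparsity, so it shrinks when many inputs spike at once.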

In the field of neuromorphic computing, ANNs play an important role in emulating the behavior and functionality of biological neurons and synapses by offering a mathematical framework for modeling complex nonlinear relationships between inputs and outputs.

By using ANNs, neuromorphic computing systems can perform a wide range of tasks, including image and speech recognition, natural language processing, and control and decision making. Additionally, ANNs can be implemented in hardware or software, allowing for a range of implementations from small low-power sensors to high-performance computing systems.

What is Neuromorphic Computing?

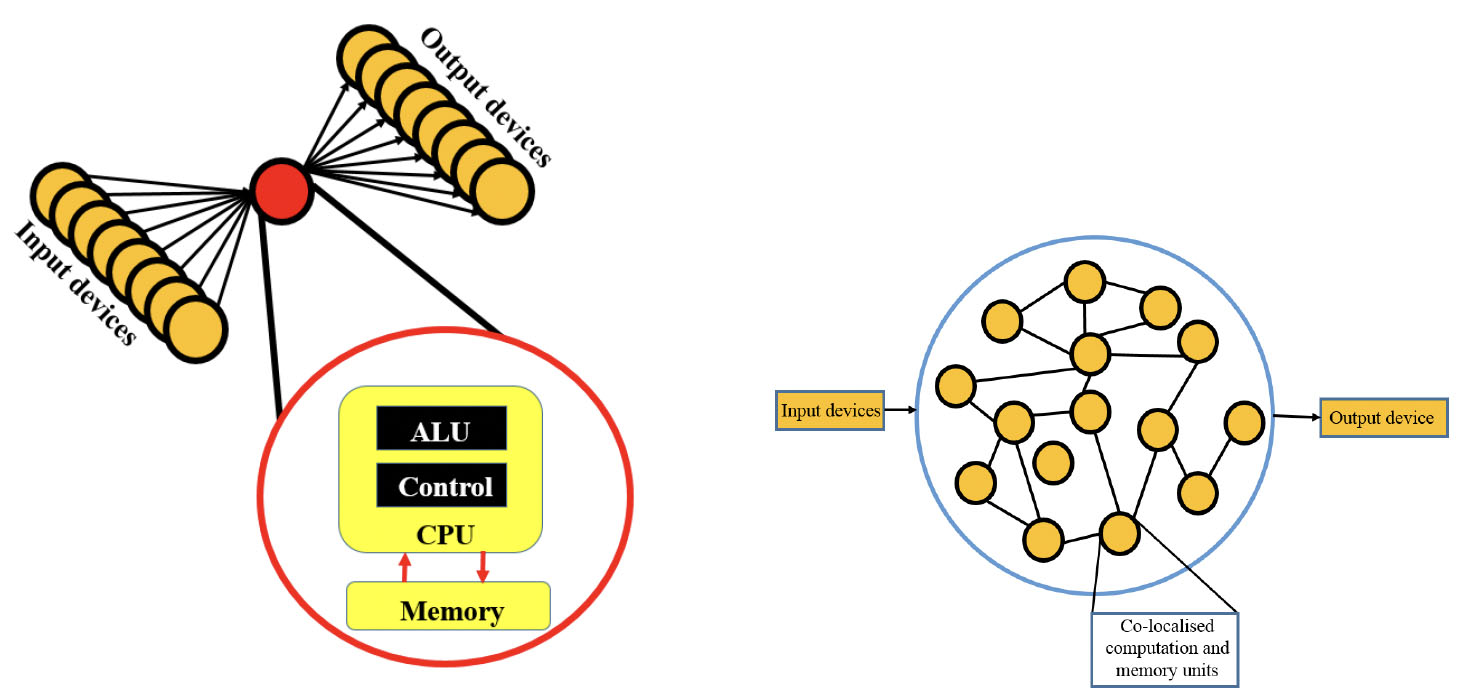

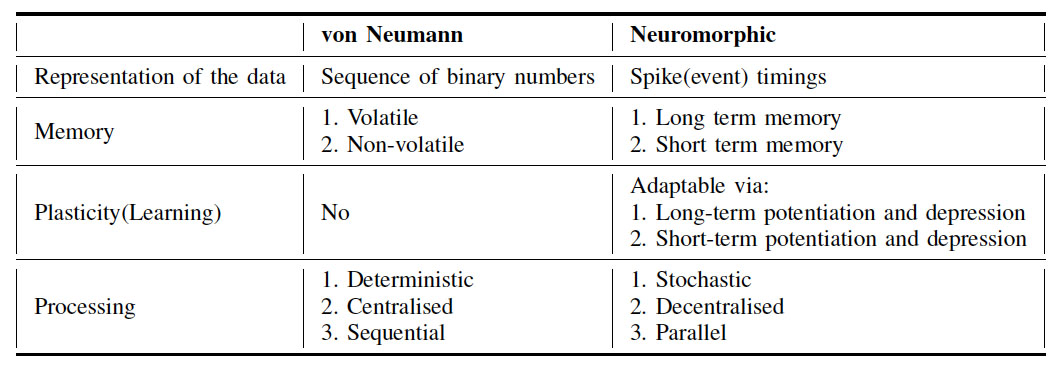

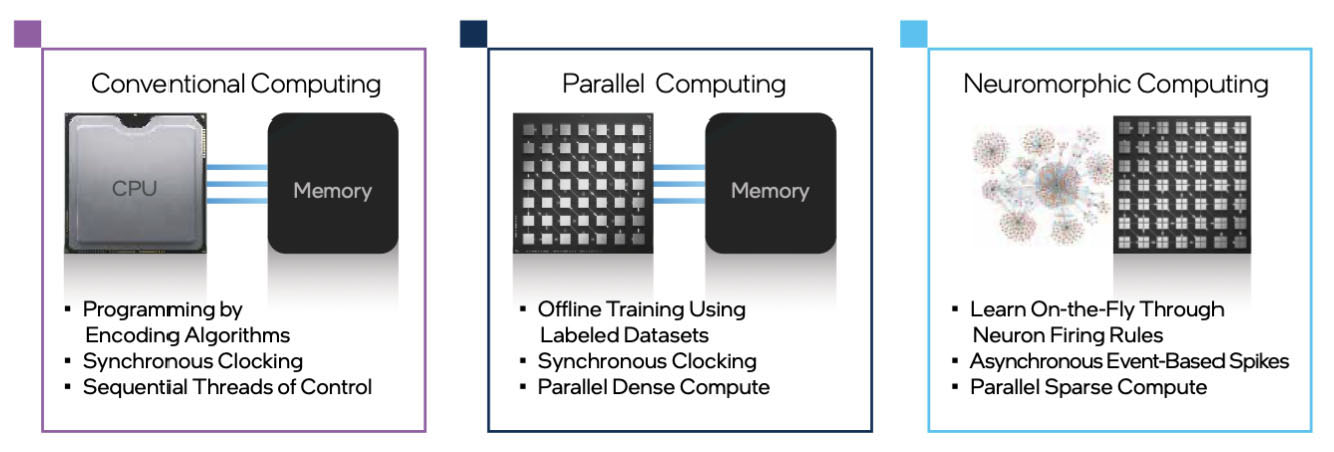

Neuromorphic computing processes information in a brain-inspired way, with memory and computation closely intertwined. This is unlike traditional computing, which is based on von Neumann systems consisting of three distinct units: a processing unit, an I/O unit, and a storage unit. This stored-program architecture uses a single memory to store both data and instructions, and a central processing unit to execute those instructions. First proposed by the mathematician and computer scientist John von Neumann, it is widely used in modern computers, is considered the standard architecture for computer systems, and relies on a clear separation between memory and processing.

However, neural networks are data-centric: most of their computations depend on data flow, and the constant shuffling of data between the processing unit and the storage unit creates a bottleneck. Because data must pass through this bottleneck in sequential order, it makes the architecture rigid.
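A back-of-the-envelope calculation shows why this traffic dominates for neural networks. In a fully connected layer evaluated on a von Neumann machine, each weight is fetched from memory and then used for a single multiply-accumulate per input sample. The sketch below assumes a hypothetical 4096 × 4096 layer with 4-byte weights that are too large to stay in on-chip cache.

```python
def layer_traffic(n_in, n_out, bytes_per_weight=4, batch=1):
    """Return (multiply-accumulates, weight bytes fetched) for one dense layer.

    Assumes the weights do not fit in on-chip cache, so they are streamed from
    main memory once per batch and reused only within that batch."""
    macs = n_in * n_out * batch
    weight_bytes = n_in * n_out * bytes_per_weight
    return macs, weight_bytes


macs, traffic = layer_traffic(4096, 4096)
print(macs, traffic, macs / traffic)  # 16777216 67108864 0.25
```

At batch size 1 the processor performs only a quarter of a multiply-accumulate for every byte it moves, so throughput is bounded by memory bandwidth rather than arithmetic capacity. Larger batches improve reuse, but the data still has to cross the same path between memory and processor; the neuromorphic alternative is to keep each synaptic weight physically next to the neuron that uses it.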

One of the critical aspects of any neuromorphic effort is the design of artificial synapses. To achieve the goal of scaling neuromorphic circuits towards the level of the human brain, it is necessary to develop nanoscale, low-power synapse-like devices.

By implementing neuromorphic principles such as spiking, plasticity, dynamic learning, and adaptability, neuromorphic computing aims to move away from the bit-precise computing paradigm towards probabilistic models of computation that are simple, reliable, and efficient in both power and data.

Neuromorphic Hardware

Neuromorphic hardware is a type of computer hardware that is specifically designed to mimic the structure and function of the human brain and to run Spiking Neural Networks efficiently.

This hardware can be used to create brain-inspired computing systems that are more energy-efficient and scalable than traditional computing systems.

In July 2019, the U.S. Air Force Research Laboratory, in partnership with IBM, unveiled what was then the world’s largest neuromorphic digital synaptic supercomputer, dubbed Blue Raven. The system delivers the processing power equivalent of 64 million neurons and 16 billion synapses while consuming only 40 watts.
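The quoted figures can be turned into per-unit numbers with simple arithmetic. The sketch uses only the three numbers given for Blue Raven; the derived values are averages and say nothing about how the system is organized internally.

```python
NEURONS = 64e6   # figures as quoted for Blue Raven
SYNAPSES = 16e9
POWER_W = 40.0

synapses_per_neuron = SYNAPSES / NEURONS         # average fan-in per neuron
microwatts_per_neuron = POWER_W / NEURONS * 1e6  # average power per neuron

print(synapses_per_neuron)              # 250.0
print(round(microwatts_per_neuron, 3))  # 0.625
```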

Three other prominent large-scale neuromorphic systems are SpiNNaker, BrainScaleS, and Intel’s Loihi. All three use conventional CMOS technology, but they take different design approaches. SpiNNaker is made up of a large number of small embedded processors connected through a custom packet-switched network; this network is optimized for brain modeling applications and allows large-scale SNNs to run in real time. BrainScaleS, on the other hand, models neurons with analog circuits that run 10 000 times faster than biology, and is optimized for experiments with accelerated learning. Loihi sits between these two systems, using a large array of asynchronous digital hardware engines to model neurons and run faster than biological real time. The primary goal of Loihi is to accelerate research and help commercialize future neuromorphic technology.
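The practical meaning of a 10 000-fold acceleration is easiest to see as wall-clock time. The sketch below assumes a constant speed-up factor equal to the figure quoted for BrainScaleS and ignores any setup or readout overhead.

```python
ACCELERATION = 10_000  # speed-up over biological real time, as quoted for BrainScaleS


def wall_clock_seconds(biological_seconds, acceleration=ACCELERATION):
    """Wall-clock time an accelerated system needs to emulate a span of biological time."""
    return biological_seconds / acceleration


one_day = 24 * 60 * 60  # 86 400 s of biological time
one_year = 365 * one_day
print(wall_clock_seconds(one_day))                  # 8.64
print(round(wall_clock_seconds(one_year) / 60, 1))  # 52.6
```

A day of biological activity takes under nine seconds and a year under an hour, which is why an accelerated system suits experiments on slow processes such as learning.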

The Role of Memristors in Neuromorphic Engineering

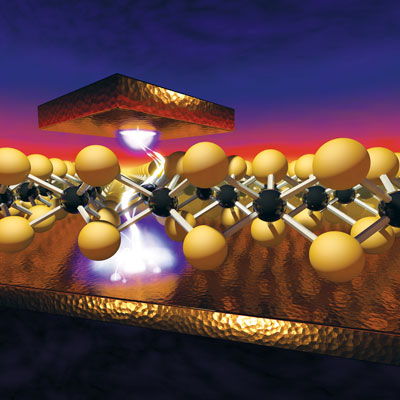

Memristors are two-terminal electrical elements similar to conventional resistors; however, a memristor’s resistance depends on the charge that has passed through it, which means that its conductance can be precisely modulated by the charge or flux through it. Its special property is that its resistance can be programmed (resistor function) and subsequently remains stored (memory function).
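Charge-dependent resistance of this kind is often illustrated with the linear ion-drift model that HP Labs used to describe its titanium-dioxide memristor in 2008. The sketch below integrates that model numerically; the resistance bounds, initial state, drift constant `k`, and pulse amplitudes are illustrative assumptions, not values for a real device.

```python
def simulate_memristor(currents, dt, r_on=100.0, r_off=16e3, x0=0.1, k=1e4):
    """Euler-integrate a linear ion-drift memristor model.

    x in [0, 1] is the normalized width of the doped (low-resistance) region.
    Resistance: R = r_on * x + r_off * (1 - x).
    State:      dx/dt = k * i(t), so x tracks the net charge passed through
                (in the HP model, k = mu_v * R_on / D**2).
    Hard clipping to [0, 1] is a crude stand-in for the window functions
    used in published models. All parameter values are illustrative."""
    x = x0
    resistances = []
    for i in currents:
        x = min(1.0, max(0.0, x + k * i * dt))
        resistances.append(r_on * x + r_off * (1.0 - x))
    return resistances


dt = 1e-3                                        # time step in seconds
pulses = [1e-3] * 10 + [0.0] * 5 + [-1e-3] * 10  # set, hold, reset (amperes)
r = simulate_memristor(pulses, dt)
print(round(r[9]), round(r[14]), round(r[-1]))   # 12820 12820 14410
```

Positive current lowers the resistance, the value holds while no current flows (the memory function), and an equal negative charge returns the device to the resistance of its initial state.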

In this sense, a memristor resembles a synapse in the human brain because it exhibits similar switching characteristics: with a high degree of plasticity, it can modify the efficiency of signal transfer between neurons under the influence of that transfer itself. This is why researchers hope to use memristors to fabricate electronic synapses for neuromorphic (i.e., brain-like) computing that mimics some aspects of learning and computation in the human brain.
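A synapse whose efficiency changes under the influence of the signals it transmits is commonly formalized as spike-timing-dependent plasticity (STDP): the connection is strengthened when the presynaptic spike arrives shortly before the postsynaptic one and weakened when the order is reversed. The sketch below applies a pair-based STDP rule to a normalized memristor conductance. STDP is one common choice of learning rule, not one the text prescribes, and the amplitudes, the 20 ms time constant, and the conductance bounds are illustrative assumptions, not measured device values.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.05, a_minus=0.055, tau_ms=20.0):
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # pre before post: potentiation, decaying with the delay
        return a_plus * math.exp(-dt / tau_ms)
    if dt < 0:   # post before pre: depression
        return -a_minus * math.exp(dt / tau_ms)
    return 0.0


def update_conductance(g, delta, g_min=0.0, g_max=1.0):
    """Apply a weight change to a normalized conductance, clipped to the
    range a (hypothetical) device can physically reach."""
    return min(g_max, max(g_min, g + delta))


g = 0.5
for t_pre, t_post in [(10.0, 15.0), (40.0, 42.0), (80.0, 70.0)]:
    g = update_conductance(g, stdp_delta(t_pre, t_post))
    print(f"pre={t_pre} post={t_post} -> g={g:.4f}")
```

The two causal pairs raise the conductance, the closer pair by more, and the anti-causal pair lowers it; the clipping mirrors the fact that a physical device has a finite conductance range.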

Despite rapid technological progress, the physics underlying ultra-fast resistive switching in memristors, particularly in atomically thin devices, is not yet well understood.

Application Areas for Neuromorphic Engineering

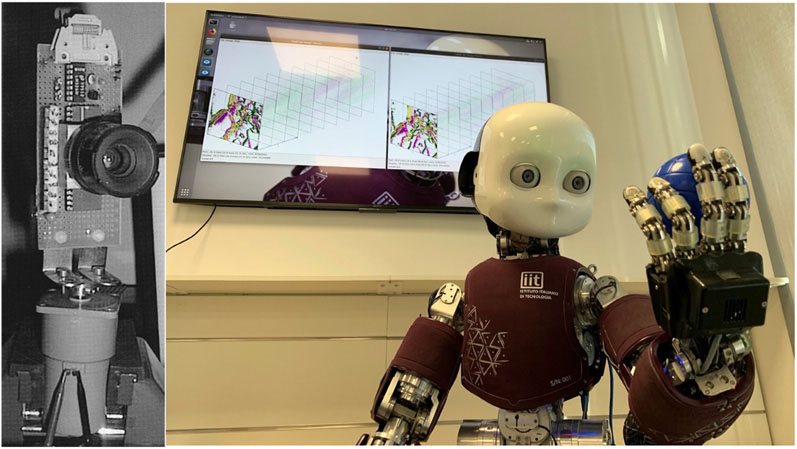

Robotics: Robotics can benefit from neuromorphic computing by having more efficient processing, real-time responsiveness, and dynamic adaptability. Neuromorphic systems are designed to operate in real-time and use minimal power, making them ideal for robotic applications where power and processing resources are limited. They can process sensory data as it arrives, allowing robots to respond in real-time to changing environments. Neuromorphic systems can also learn and adapt, which can improve a robot’s ability to perform tasks in complex and unpredictable environments.

Self-driving cars: Many engineers have concluded that incremental improvements to current technologies will not be enough to achieve truly autonomous vehicles. Neuromorphic engineering, with its distinct approach to perception, computation, and cognition, may provide some of the breakthroughs needed to make truly autonomous vehicles a reality. Self-driving cars can benefit from neuromorphic computing through more efficient processing, real-time responsiveness, and improved decision-making. Neuromorphic systems can process large amounts of sensory data in real time and act on that data efficiently. Additionally, their ability to learn and adapt can enable self-driving cars to continuously improve their decision-making over time.

Olfaction and chemosensation: Machine olfaction, or the use of technology to detect and identify odors, has been a pioneer in using neuromorphic techniques to process and analyze sensory data. This is partly due to the in-depth study of the olfactory system in both experimental and computational neuroscience. To further improve and advance machine olfaction, there is a need for larger sensor arrays and advancements in neuromorphic circuitry to better process the data collected by these sensors. Additionally, the study of the various computational techniques used in these systems may help answer important questions about how and when to adjust the system’s learning capabilities and the impact of local learning rules on the performance of these systems.

Event vision sensors: Event-based vision sensors are inspired by the human retina and attempt to recreate how it acquires and processes visual information: instead of capturing full frames at a fixed rate, each pixel independently reports changes in brightness as they occur. Neuromorphic engineering can help these sensors process visual information more efficiently, more accurately, and more adaptively. Neuromorphic systems can handle the resulting stream of visual events in real time, leading to better detection and recognition of visual information and faster response times. Additionally, their ability to learn and adapt can enable event-based vision systems to keep improving over time, resulting in more effective and reliable detection and recognition.
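Event cameras are commonly idealized as follows: each pixel emits an event (time, x, y, polarity) whenever its log intensity has changed by more than a contrast threshold since that pixel's last event. The sketch below derives such events from ordinary frames. The threshold of 0.2, the small offset that avoids log(0), and the one-event-per-pixel-per-frame simplification are assumptions; real sensors respond asynchronously rather than frame by frame.

```python
import math


def frames_to_events(frames, timestamps, threshold=0.2, eps=1e-3):
    """Emulate event-camera output from a sequence of grayscale frames.

    Each pixel keeps the log intensity at its last event as a reference. When the
    current log intensity differs from it by at least `threshold`, the pixel emits
    (t, x, y, polarity) and resets its reference. Simplification: at most one
    event per pixel per frame, even for large changes."""
    ref = [[math.log(p + eps) for p in row] for row in frames[0]]
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, p in enumerate(row):
                log_p = math.log(p + eps)
                diff = log_p - ref[y][x]
                if abs(diff) >= threshold:
                    events.append((t, x, y, 1 if diff > 0 else -1))
                    ref[y][x] = log_p
    return events


# Hypothetical 2x2 scene: one pixel brightens, later another darkens
frames = [
    [[0.5, 0.5], [0.5, 0.5]],
    [[1.0, 0.5], [0.5, 0.5]],
    [[1.0, 0.5], [0.5, 0.2]],
]
print(frames_to_events(frames, timestamps=[0, 1, 2]))
# [(1, 0, 0, 1), (2, 1, 1, -1)]
```

Of the eight pixel readings after the first frame, only two generate events; static parts of the scene produce no output for downstream neuromorphic processing.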

Neuromorphic audition: Neuromorphic hearing technology is inspired by the remarkable capabilities of human hearing. Its aim is to match human hearing abilities by creating algorithms, hardware, and applications for artificial hearing devices. By incorporating principles of neuromorphic engineering into the design of these devices, researchers aim to create systems that process auditory information in a manner similar to the human brain. This could result in more sophisticated hearing devices that better understand speech and sounds in a variety of challenging acoustic environments. Additionally, neuromorphic hardware and software can process auditory information more energy-efficiently, making it possible to miniaturize these devices and integrate them into various applications. Progress in neuromorphic audition critically depends on advances in both silicon technology and algorithm development.

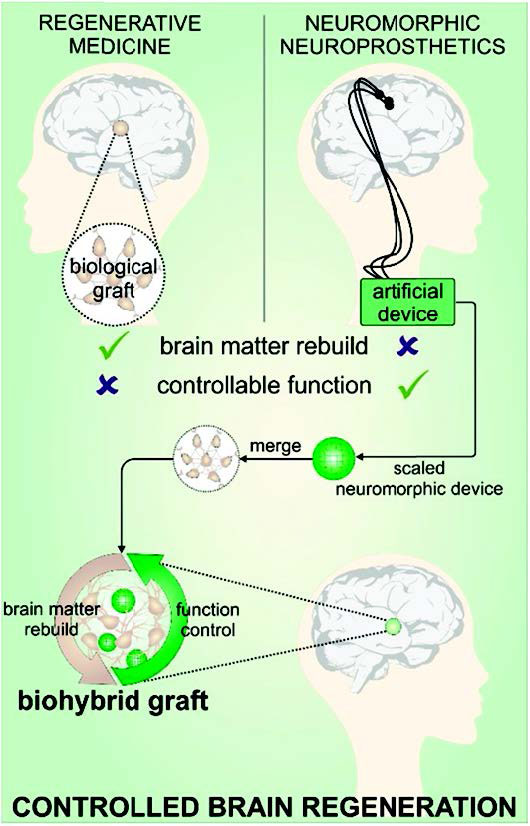

Biohybrid systems for brain repair: Artificial devices have been successfully incorporated within neural spheroids and even inside individual living cells. However, these devices are simple compared with the complexity of a neuromorphic system. Creating functional biohybrids will require collaboration across different fields to tackle the numerous challenges involved. Rapid advances in fabrication and miniaturization, energy harvesting, learning algorithms, wireless technology, and biodegradable bioelectronics suggest that it may soon be possible to develop advanced biohybrid neurotechnology to safely and effectively regenerate the brain.

By incorporating elements of neural networks and artificial intelligence into these systems, it is possible to improve the ability of these systems to interact and interface with the brain in a more natural and intuitive way. This could lead to more effective and efficient rehabilitation strategies for individuals suffering from neurological injuries or disorders.

Embedded devices for neuromorphic time-series: Analyzing human-related time-series data involves many tasks, such as understanding speech, detecting keywords, monitoring health, and recognizing human activity, and these tasks call for specialized devices. Processing this type of data on such devices is challenging: the raw signals must be cleaned up and denoised, and both long- and short-term dependencies within the data must be captured. Commercial off-the-shelf implementations already exist in the form of fitness monitors, sleep trackers, and EEG-based devices that identify markers of brain trauma. Neuromorphic engineering can be used to develop algorithms optimized for analyzing and processing time-varying data. This can improve processing speed and accuracy while reducing power consumption, which is particularly important for wearable devices.
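One common way to turn such time-varying signals into spikes for a neuromorphic processor is delta (send-on-delta) modulation: an UP or DOWN event is emitted each time the signal has moved by a fixed step since the last event, so a slowly changing signal produces few events. The sketch below is a minimal version; the step size of 0.5 and the sample values are hypothetical, and other encodings, such as rate or latency coding, are also used.

```python
def delta_encode(signal, threshold):
    """Send-on-delta encoding into (time_index, polarity) spike events."""
    level = signal[0]  # last transmitted level; the receiver knows the start value
    spikes = []
    for t, s in enumerate(signal[1:], start=1):
        while s - level >= threshold:  # signal rose by a full step: UP spike
            spikes.append((t, 1))
            level += threshold
        while level - s >= threshold:  # signal fell by a full step: DOWN spike
            spikes.append((t, -1))
            level -= threshold
    return spikes


def delta_decode(spikes, start, threshold, length):
    """Rebuild a staircase approximation of the signal from the spike list."""
    steps = [0] * length
    for t, polarity in spikes:
        steps[t] += polarity
    out, level = [], start
    for n in steps:
        level += n * threshold
        out.append(level)
    return out


signal = [0.0, 0.2, 1.1, 1.2, 0.4, 0.4]  # hypothetical sensor samples
spikes = delta_encode(signal, threshold=0.5)
recon = delta_decode(spikes, start=signal[0], threshold=0.5, length=len(signal))
print(spikes)  # [(2, 1), (2, 1), (4, -1)]
print(recon)   # [0.0, 0.0, 1.0, 1.0, 0.5, 0.5]
```

Six samples become three events, and the reconstruction stays within one step of the original at every sample; the step size sets the trade-off between accuracy and sparsity.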

Collaborative autonomous systems: Collaborative Autonomous Systems (CAS) refers to systems that can cooperate among themselves and with humans with variable levels of human intervention (depending on the level of autonomy) in performing complex tasks in unknown environments. An example of CAS would be a fleet of drones working together to survey a large area, or a group of robots performing a task in a factory setting. In these scenarios, the individual systems must be able to communicate with each other and coordinate their actions in order to achieve their goal effectively and efficiently. Other examples of CAS include autonomous vehicles working together in a traffic network, or a group of robots collaborating in a disaster response scenario. The success of CAS requires advanced technologies such as machine learning, artificial intelligence, and networked communication systems.

Conclusion

Neuromorphic computing is a new and exciting field that offers the potential to create computers that are faster, more efficient, and more ‘intelligent’ than traditional computers. While there are still challenges to be addressed, the potential applications of neuromorphic computing are vast and could have a huge impact on a wide range of industries.

One of the main advantages of neuromorphic computing is its efficiency. Unlike traditional computers, which rely on sequential processing, neuromorphic computers can process information in parallel, which can make them much faster. Additionally, neuromorphic computers can be more power-efficient than traditional computers because their event-driven operation means they expend energy only when there is activity to process.

Another advantage of neuromorphic computing is its ability to process and analyze large amounts of data in real-time. This makes it ideal for use in areas such as image and speech recognition, natural language processing, and autonomous systems. These capabilities could have a huge impact on industries such as healthcare, finance, and transportation, among others.

Despite its potential, there are still several challenges associated with neuromorphic computing that need to be addressed. One of the biggest challenges is the lack of software and hardware support for neuromorphic computing. Currently, there are only a few commercial neuromorphic computing systems available, and they are often expensive and difficult to use.

Another challenge is the lack of a common programming paradigm for neuromorphic computing. Currently, there are many different approaches to programming neuromorphic computers, and there is no standard or widely accepted method for developing software for these systems.